Featured Refresh

In your view, have recent events changed the way people around the world view your country? If so, can you give any examples?

I think people around the world are exhaling deeply, smiling widely and saying, “I’m so glad America has got its shit together again.” Biden and Harris are a sign that the world is righting itself.

January 27, 2021

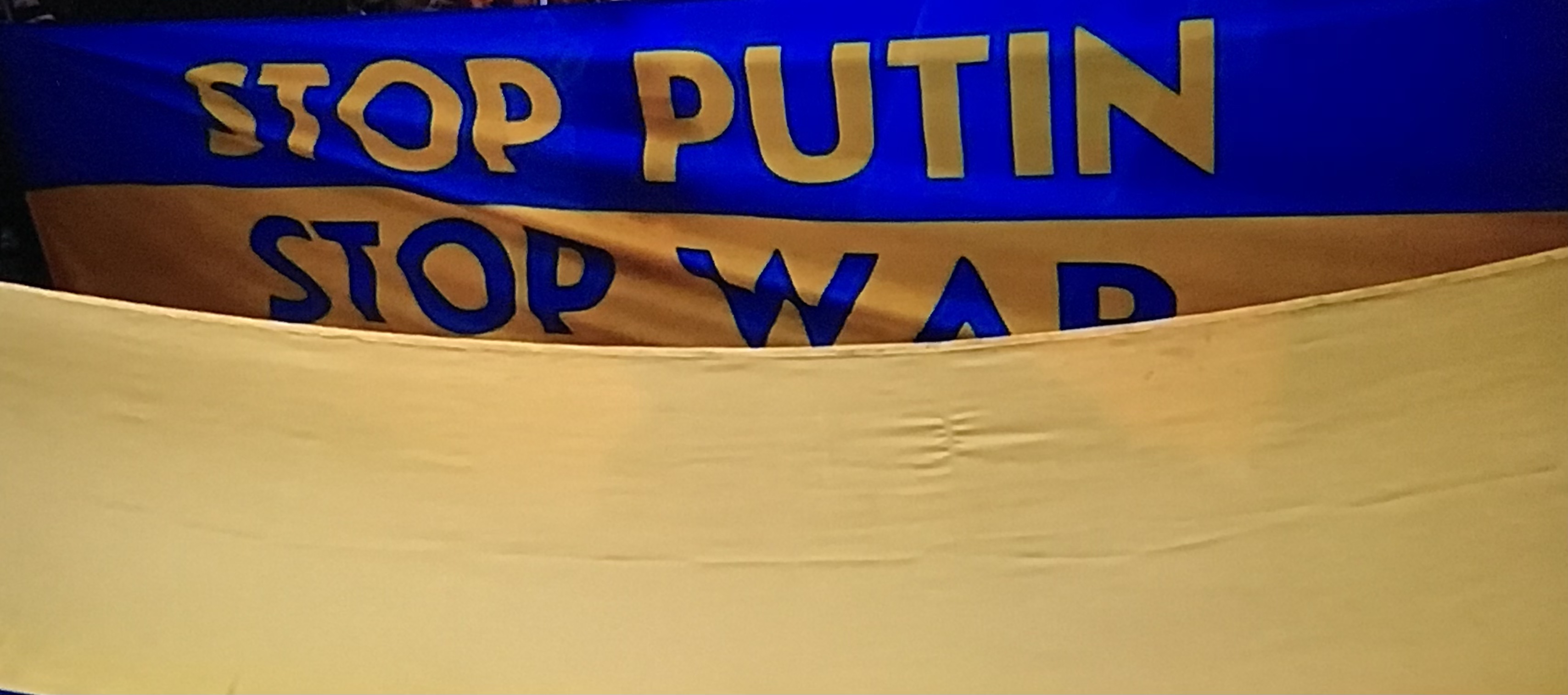

March 5, 2022